While attending a Hot Interconnects talk on supercomputing, I got the following idea. The TOP500 site provides graphs of the number of systems and total performance per interconnect family, which give an approximate measure of the popularity of the different interconnects. But how do they affect the performance of an individual system? Clearly, a high-performance interconnect should result in higher efficiency than a commodity one. But by how much? And which systems would use what type of interconnect?

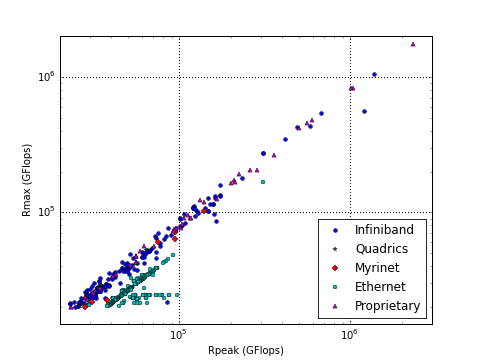

For the following set of graphs, I downloaded the XML file for the current TOP500 list (since updated to the November 2009 list). I decided to look at each system’s LINPACK performance (Rmax) compared with its peak performance (Rpeak), which is what you get when adding up the theoretical performance of all processor cores in the system. The ratio of the two should be a measure of how efficiently the system’s total computational power can be used, which is mostly a function of the interconnect (also of the software, but for a TOP500 listing we would expect that to be rather good!).
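
The calculation itself is a single division per system. Here is a minimal Python sketch of it. The `System` class, its field names and the sample numbers are made up for illustration; they are not the tag names or values from the TOP500 XML file.

```python
from dataclasses import dataclass


@dataclass
class System:
    """One list entry. Field names are hypothetical, not the XML tag names."""
    rank: int
    interconnect: str
    rmax: float   # measured LINPACK performance
    rpeak: float  # theoretical peak, in the same unit as rmax


def efficiency(system: System) -> float:
    """Return Rmax/Rpeak as a fraction between 0 and 1."""
    if system.rpeak <= 0:
        raise ValueError("Rpeak must be positive")
    return system.rmax / system.rpeak


# Placeholder numbers for illustration only, not a real TOP500 entry.
example = System(rank=1, interconnect="Infiniband", rmax=80.0, rpeak=100.0)
print(f"#{example.rank} ({example.interconnect}): {efficiency(example):.0%}")
```

Only the ratio matters here, so the unit (GFlops, TFlops) is irrelevant as long as Rmax and Rpeak use the same one.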

So here is the graph:

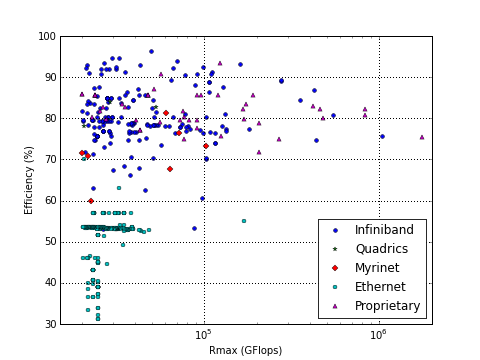

Here’s the same data but this time efficiency (Rmax/Rpeak) is plotted directly:

For top-of-the-list machines, Infiniband and proprietary interconnects (mainly BlueGene, Cray and SGI’s NUMAlink) are the most common. Their efficiency is rather similar at 75-80% for the large machines, and up to 95% for smaller ones. The main alternative interconnection technology, Ethernet, only starts to be in common use from system #98 onwards. Clearly, the efficiency of these systems is significantly lower, at some 55%.
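
To put a single number on each interconnect family, one option is to average the efficiency over all systems in that family. The sketch below shows one way to do it, assuming the list has already been reduced to `(family, rmax, rpeak)` tuples. The function name and the sample tuples are hypothetical placeholders, not values from the list.

```python
from collections import defaultdict
from statistics import mean


def mean_efficiency_by_family(systems):
    """Average Rmax/Rpeak per interconnect family.

    `systems` is an iterable of (family, rmax, rpeak) tuples.
    """
    groups = defaultdict(list)
    for family, rmax, rpeak in systems:
        groups[family].append(rmax / rpeak)
    return {family: mean(values) for family, values in groups.items()}


# Placeholder tuples for illustration only, not real TOP500 entries.
placeholder = [
    ("Infiniband", 80.0, 100.0),
    ("Infiniband", 90.0, 100.0),
    ("Gigabit Ethernet", 55.0, 100.0),
]
result = mean_efficiency_by_family(placeholder)
for family, value in sorted(result.items()):
    print(f"{family}: {value:.0%}")
```

A plain mean hides the spread between large and small machines, so it complements the per-system graph rather than replacing it.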

Two main outliers to this theory can be seen. System #5 uses Infiniband but manages an efficiency of only 46%. This is the Tianhe-1 cluster located in Tianjin, China. It consists of 5120 ATI Radeon HD 4870 video cards, in addition to a number of Intel Xeon CPUs. I would guess that the software for this rather radical new architecture (especially at this scale!) is not yet fully mature, and that this system should get a boost in Rmax by the time of the next TOP500 list in June.

The other exception is #486, again a Chinese system, which uses a 10-Gbit Ethernet network (rather than the 1-Gbit Ethernet of the others; at least, as far as I could tell from parsing the list). Clearly, the faster Ethernet technology significantly improves efficiency: at 70% it comes much closer to the Infiniband and proprietary-interconnect powered systems. Compared with the gap to 1GbE, though, the difference between 10GbE and Infiniband is minimal, and the price comes pretty close as well. So I guess, in the end, you do get what you pay for…